Engineering Case Study 2025

Project Aeon - Modular Local LLM System

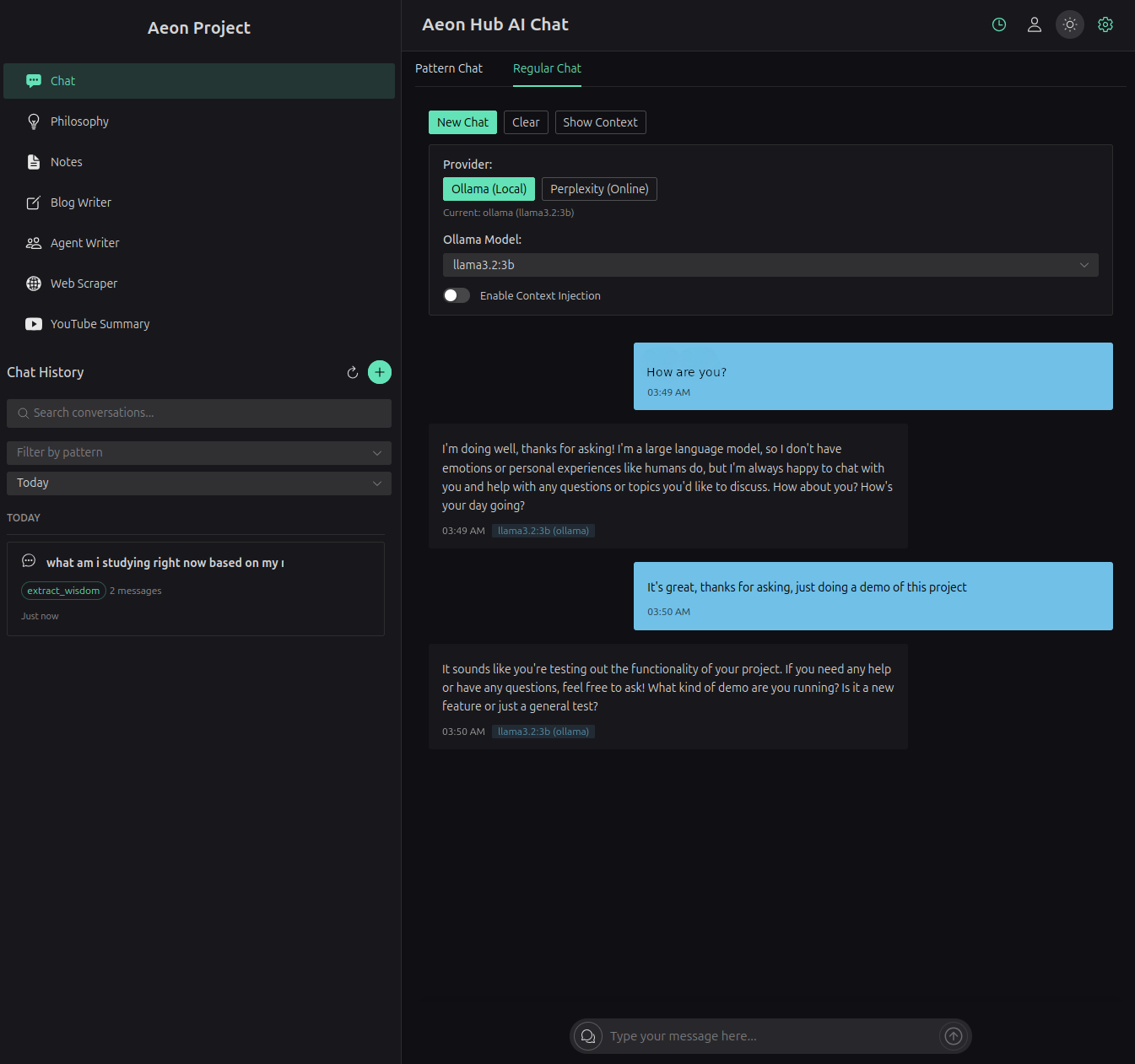

A personal offline AI assistant system where data remains local, built with FastAPI, ChromaDB, Vue 3, and Ollama. The project is still in active development.

Problem and Approach

The problem: a personal AI assistant that works fully offline, so that user data never leaves the local machine. The approach: a FastAPI backend, ChromaDB as the vector database for semantic retrieval, a Vue 3 frontend, and Ollama as the local LLM runtime.

Technical Learnings

- Vector database usage and semantic retrieval workflow design

- FastAPI backend architecture for ML-oriented services

- Vue 3 Composition API implementation with TypeScript

- Local LLM runtime integration and iterative optimization

- Privacy-preserving architecture for offline AI use cases

- Interoperability patterns across multiple AI tooling layers
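
To make the semantic retrieval workflow from the list above concrete, here is a minimal, standard-library-only sketch. It is not Aeon's actual code: `embed`, `LocalStore`, and `build_prompt` are hypothetical names, the bag-of-words "embedding" is a toy stand-in for a real embedding model, and the in-memory store stands in for a vector database such as ChromaDB. The final prompt is what would be handed to a locally served model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector (assumption: stands in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LocalStore:
    """In-memory stand-in for a vector database collection (hypothetical)."""

    def __init__(self) -> None:
        self._docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the raw text next to its vector so it can be returned on a hit.
        self._docs.append((text, embed(text)))

    def query(self, question: str, k: int = 2) -> list[str]:
        # Rank every stored document by similarity to the question, keep the top k.
        q = embed(question)
        ranked = sorted(self._docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble a retrieval-augmented prompt for a local LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


store = LocalStore()
store.add("The backup job runs every night at 2 am.")
store.add("The router admin password is stored in the safe.")
store.add("Grocery list: oats, coffee, apples.")

question = "When does the backup job run?"
hits = store.query(question, k=1)
prompt = build_prompt(question, hits)
print(prompt)
```

Everything in this sketch runs in a single process with no network calls, which mirrors the privacy goal: documents, vectors, and the prompt all stay on the local machine. In a real setup the toy pieces would be swapped for a persistent vector store and a proper embedding model, while the add, query, and prompt-building steps keep the same shape.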